QSFP28 MicroMux expands 10 & 40 Gig faceplate capacity

Monday, April 4, 2016 at 8:42AM

- ADVA Optical Networking's MicroMux aggregates lower-rate 10- and 40-gigabit client signals in a pluggable QSFP28 module

- ADVA is also claiming an industry first in implementing the Open Optical Line System concept that is backed by Microsoft

The need for terabits of capacity to link Internet content providers’ mega-scale data centres has given rise to a new class of optical transport platform, known as data centre interconnect.

Source: ADVA Optical Networking


Such platforms are designed to be power-efficient and compact, and to support a variety of client-side signal rates spanning 10, 40 and 100 gigabit. But this poses a challenge for design engineers, as the front panel of such platforms can only fit so many lower-rate client-side ports. As a result, the aggregate client data fed to the platform can fall short of its full line-side transport capability.

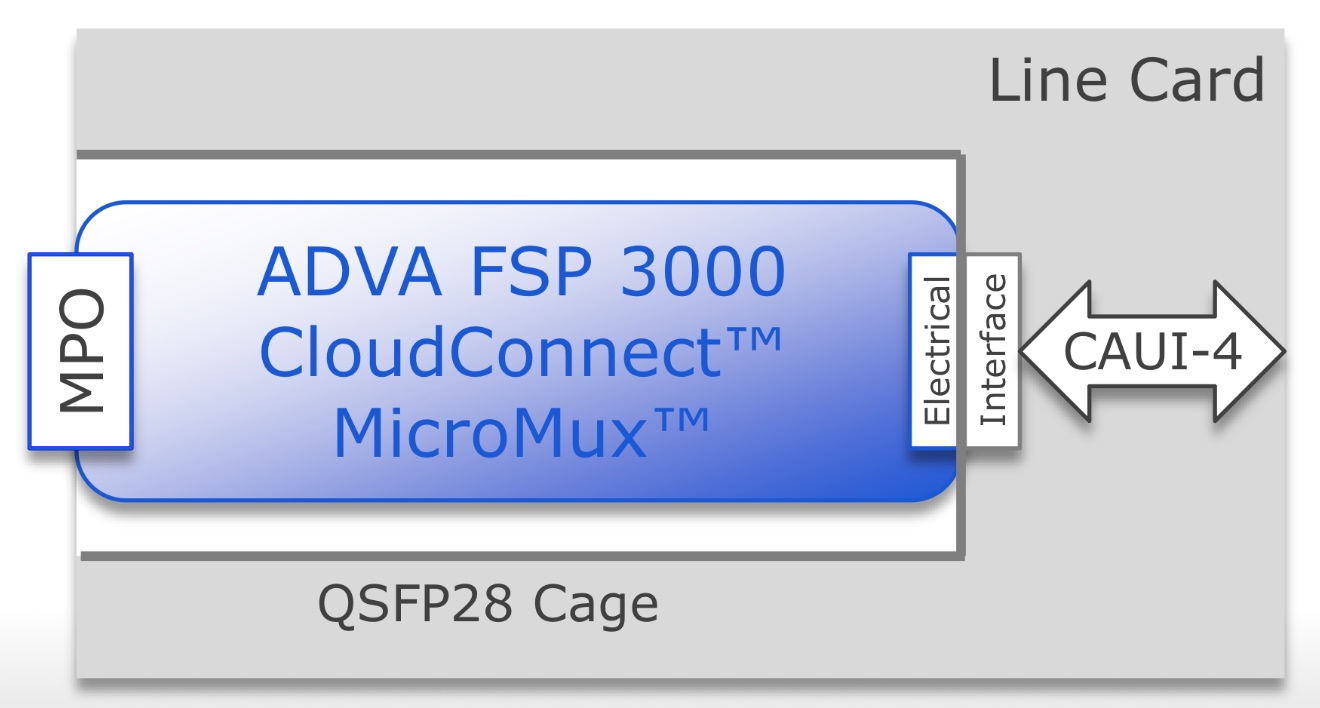

ADVA Optical Networking has tackled the problem by developing the MicroMux, a multiplexer housed within a QSFP28 module. The MicroMux plugs into the front panel of the CloudConnect, ADVA's data centre interconnect platform, and funnels either ten 10-gigabit ports or two 40-gigabit ports into one of the front panel's 100-gigabit ports.

"The MicroMux allows you to support legacy client rates without impacting the panel density of the product," says Jim Theodoras, vice president of global business development at ADVA Optical Networking.

Using the MicroMux, lower-speed client interfaces can be added to a higher-speed product without stranding line-side bandwidth. An alternative approach to avoid wasting capacity is to install a lower-speed platform, says Theodoras, but then you can't scale.

ADVA Optical Networking offers four MicroMux pluggables for its CloudConnect data centre interconnect platform: short-reach and long-reach 10-by-10 gigabit QSFP28s, and short-reach and intermediate-reach 2-by-40 gigabit QSFP28 modules.

The MicroMux features an MPO connector. For the 10-gigabit products, the MPO connector supports 20 fibres, while for the 40-gigabit products it supports four. At the other end of the QSFP28, where it plugs into the platform, sits a CAUI-4 4x25-gigabit electrical interface (see diagram above).
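Why the CAUI-4 interface matters becomes clearer with a rate budget. The sketch below only checks that the client signals fit within the four electrical lanes; it does not describe how ADVA's gearbox actually maps them. It uses the standard IEEE 802.3 serial rates after 64b/66b coding (10.3125 Gb/s per 10GBASE-R lane, four such lanes for 40GBASE-R, 25.78125 Gb/s per CAUI-4 lane); the function names are illustrative.

```python
from fractions import Fraction

# Standard IEEE 802.3 serial rates after 64b/66b coding, in Gb/s.
TEN_GBASE_R_GBPS = Fraction("10.3125")   # one 10GBASE-R lane
FORTY_GBASE_R_LANES = 4                  # 40GBASE-R uses 4 x 10.3125 Gb/s lanes
CAUI4_LANE_GBPS = Fraction("25.78125")   # one CAUI-4 electrical lane
CAUI4_LANES = 4


def client_load(num_10g: int = 0, num_40g: int = 0) -> Fraction:
    """Aggregate serial rate (Gb/s) presented by the client-side ports."""
    lanes = num_10g + num_40g * FORTY_GBASE_R_LANES
    return lanes * TEN_GBASE_R_GBPS


def caui4_capacity() -> Fraction:
    """Total serial rate (Gb/s) of the 4x25G electrical host interface."""
    return CAUI4_LANES * CAUI4_LANE_GBPS


for name, load in [("10 x 10GbE", client_load(num_10g=10)),
                   ("2 x 40GbE", client_load(num_40g=2))]:
    fill = load / caui4_capacity()
    print(f"{name}: {float(load)} of {float(caui4_capacity())} Gb/s ({float(fill):.0%})")
```

Ten 10GbE clients fill the CAUI-4 interface exactly, while two 40GbE clients use 80 percent of it, which is why the 2-by-40 variants leave some of the 100-gigabit port unused.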

“The key thing is the CAUI-4 interface; this is what makes it all work,” says Theodoras.

Inside the MicroMux, signals are converted between the optical and electrical domains while a gearbox IC translates between 10- or 40-gigabit signals and the CAUI-4 format.

Theodoras stresses that the 10-gigabit inputs are not the old 100 Gigabit Ethernet 10x10 MSA but independent 10 Gigabit Ethernet streams. "They can come from different routers, different ports and different timing domains," he says. "It is no different than if you had 10, 10 Gigabit Ethernet ports on the front face plate."
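The point that each 10-gigabit input remains an independent stream can be illustrated with a toy multiplexer. This is a hypothetical block interleaver, not ADVA's gearbox: blocks from each client are tagged with their source port, dealt round-robin across four lanes, and recovered intact at the far end. A real device must also absorb the small frequency offsets between independently clocked clients, which this sketch ignores.

```python
from itertools import cycle


def mux(streams, num_lanes=4):
    """Toy mux: take one block from each client in turn, tag it with its
    port number, and deal the tagged blocks round-robin across the lanes."""
    lanes = [[] for _ in range(num_lanes)]
    lane_iter = cycle(range(num_lanes))
    depth = max((len(s) for s in streams), default=0)
    for i in range(depth):
        for port, stream in enumerate(streams):
            if i < len(stream):
                lanes[next(lane_iter)].append((port, stream[i]))
    return lanes


def demux(lanes, num_ports):
    """Read the lanes back in the same round-robin order and use the tags
    to return every block to its original port, in its original order."""
    out = [[] for _ in range(num_ports)]
    read_pos = [0] * len(lanes)
    lane_iter = cycle(range(len(lanes)))
    for _ in range(sum(len(lane) for lane in lanes)):
        k = next(lane_iter)
        port, block = lanes[k][read_pos[k]]
        read_pos[k] += 1
        out[port].append(block)
    return out


# Ten independent clients, each sending three blocks.
clients = [[f"port{p}-blk{i}" for i in range(3)] for p in range(10)]
lanes = mux(clients)
print([len(lane) for lane in lanes])
print(demux(lanes, 10) == clients)
```

Because every block carries its port tag, no client's data depends on another's, mirroring Theodoras's comparison with ten separate 10 Gigabit Ethernet ports on the faceplate.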

Using the pluggables, a 5-terabit CloudConnect configuration can support up to 520, 10 Gigabit Ethernet ports, according to ADVA Optical Networking.
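A quick check of the port count, assuming (the article does not say) that all 520 ports are delivered through the 10-by-10 gigabit MicroMux variants rather than native 10-gigabit ports. The constant and function names are illustrative.

```python
QUOTED_10GBE_PORTS = 520  # ADVA's figure for a 5-terabit CloudConnect configuration
PORTS_PER_MICROMUX = 10   # assumption: every port comes via a 10-by-10 gigabit MicroMux
CLIENT_RATE_GBPS = 10


def micromux_modules_needed(ports: int, per_module: int = PORTS_PER_MICROMUX) -> int:
    """QSFP28 cages needed, rounding up when the last module is partly filled."""
    return -(-ports // per_module)


modules = micromux_modules_needed(QUOTED_10GBE_PORTS)
client_tbps = QUOTED_10GBE_PORTS * CLIENT_RATE_GBPS / 1000
print(f"{modules} MicroMux modules carrying {client_tbps} Tb/s of 10GbE clients")
```

Under that assumption, the quoted figure corresponds to 52 QSFP28 cages populated with MicroMux modules; how these map onto the chassis' line-side capacity is not detailed in the article.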

The first products will ship in the third quarter to preferred customers that are helping with their development, with general availability expected at year-end.

ADVA Optical Networking unveiled the MicroMux at OFC 2016, held in Anaheim, California in March. ADVA also used the show to detail its Open Optical Line System demonstration with switch vendor Arista Networks.


Open Optical Line System

The Open Optical Line System is a concept being promoted by the Internet content providers to afford them greater control of their optical networking requirements.

Data centre players typically update their servers and top-of-rack switches every three years, yet optical transport functions such as amplifiers, multiplexers and ROADMs have an upgrade cycle closer to 15 years.

“When the transponding function is stuck in with something that is replaced every 15 years and they want to replace it every three years, there is a mismatch,” says Theodoras.

Data centre interconnect line cards can be replaced more frequently with newer cards while the chassis is retained. The CloudConnect's optical line shelf is also designed to accept external wavelengths from other products by supporting the Open Optical Line System. This adds flexibility in a way that matches the work practices of the data centre players.

“The key part of the Open Optical Line System is the software,” says Theodoras. “The software lets that optical line shelf be its own separate node; an individual network element.”

The data centre operator can then manage the standalone CloudConnect Open Optical Line System product. Such a product can take coloured wavelength inputs and even provide feedback to the source platform, so that the wavelength is tuned to the correct channel. “It’s an orchestration and a management level thing,” says Theodoras.
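The article does not describe the feedback mechanism, so the following is a hypothetical sketch of such a tuning loop. Only the channel plan is standard: ITU-T G.694.1 flexible-grid centre frequencies sit at 193.1 THz + n x 6.25 GHz. The `TunableSource` class, the per-round step limit and the tolerance are all illustrative assumptions.

```python
from dataclasses import dataclass

ANCHOR_GHZ = 193_100.0   # ITU-T G.694.1 anchor frequency (193.1 THz)
GRANULARITY_GHZ = 6.25   # flexible-grid central-frequency granularity


def slot_centre_ghz(n: int) -> float:
    """Nominal central frequency for flexible-grid index n."""
    return ANCHOR_GHZ + n * GRANULARITY_GHZ


@dataclass
class TunableSource:
    """Hypothetical transmitter on another vendor's platform."""
    frequency_ghz: float
    max_step_ghz: float = 2.0  # assumed largest correction applied per round

    def apply_correction(self, delta_ghz: float) -> None:
        step = max(-self.max_step_ghz, min(self.max_step_ghz, delta_ghz))
        self.frequency_ghz += step


def align_to_slot(source: TunableSource, n: int,
                  tolerance_ghz: float = 0.5, max_rounds: int = 20) -> int:
    """Line-system side of the loop: measure the incoming wavelength, report
    the error back to the source, and repeat until it sits on channel n.
    Returns the number of correction rounds that were needed."""
    target = slot_centre_ghz(n)
    for rounds in range(max_rounds):
        error = target - source.frequency_ghz
        if abs(error) <= tolerance_ghz:
            return rounds
        source.apply_correction(error)
    raise RuntimeError("source did not converge onto the assigned channel")


src = TunableSource(frequency_ghz=193_180.0)  # starts 5 GHz above slot n=12
print(align_to_slot(src, n=12), src.frequency_ghz)
```

Keeping the control loop in the line system, rather than in the transponder, reflects Theodoras's description of the optical line shelf as a separately managed network element.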

Arista recently added a coherent line card to its 7500 spine switch family.

The card supports six CFP2-ACOs with a reach of up to 2,000km, sufficient for most data centre interconnect applications, says Theodoras. The 7500 also supports the layer-two MACsec security protocol. However, it does not support flexible modulation formats. The CloudConnect does, supporting 100-, 150- and 200-gigabit formats, and it also has a 3,000km reach.
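One common way to obtain 100-, 150- and 200-gigabit formats is to keep the symbol rate fixed and change the number of bits per symbol. The sketch assumes PM-QPSK, PM-8QAM and PM-16QAM; the article confirms only PM-16QAM for the 200-gigabit wavelengths, and the 32 Gbaud symbol rate is an illustrative value rather than an ADVA specification.

```python
# Bits carried per symbol in each polarisation for dual-polarisation formats.
BITS_PER_SYMBOL_PER_POL = {"PM-QPSK": 2, "PM-8QAM": 3, "PM-16QAM": 4}
POLARISATIONS = 2


def raw_line_rate_gbps(fmt: str, symbol_rate_gbaud: float) -> float:
    """Raw line rate before FEC and framing overhead are removed."""
    return symbol_rate_gbaud * POLARISATIONS * BITS_PER_SYMBOL_PER_POL[fmt]


SYMBOL_RATE_GBAUD = 32.0  # illustrative assumption
for fmt, nominal in [("PM-QPSK", 100), ("PM-8QAM", 150), ("PM-16QAM", 200)]:
    raw = raw_line_rate_gbps(fmt, SYMBOL_RATE_GBAUD)
    print(f"{nominal}G as {fmt}: {raw:.0f} Gb/s raw at {SYMBOL_RATE_GBAUD:.0f} Gbaud")
```

The raw rates scale 2:3:4, matching the 100:150:200 ratio of the client payloads; the difference between raw and payload rate is taken up by FEC and framing overhead.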

Source: ADVA Optical Networking


In the Open Optical Line System demonstration, ADVA Optical Networking squeezed the Arista 100-gigabit wavelength into a narrower 37.5GHz channel, sandwiched between two 100-gigabit wavelengths from legacy equipment and two 200-gigabit (PM-16QAM) wavelengths from the CloudConnect Quadplex card. All five wavelengths were sent over a 2,000km link.
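The gain from the narrower channel can be quantified. Under ITU-T G.694.1, flexible-grid slot widths are multiples of 12.5 GHz, so a 37.5GHz channel is three width units. The comparison with a 50GHz slot is an assumption: it is the conventional fixed-grid spacing for 100-gigabit coherent wavelengths, but the article does not state the legacy channels' widths.

```python
SLOT_WIDTH_GRANULARITY_GHZ = 12.5  # ITU-T G.694.1: slot width = 12.5 GHz x m


def slot_width_multiplier(width_ghz: float) -> int:
    """Return m for a flexible-grid slot of the given width."""
    m = width_ghz / SLOT_WIDTH_GRANULARITY_GHZ
    if m < 1 or m != int(m):
        raise ValueError(f"{width_ghz} GHz is not a valid flexible-grid slot width")
    return int(m)


def spectral_efficiency(rate_gbps: float, width_ghz: float) -> float:
    """Payload bits per second per hertz of occupied spectrum."""
    return rate_gbps / width_ghz


for width in (50.0, 37.5):  # 50 GHz is the assumed conventional slot
    print(f"100G in {width} GHz: m={slot_width_multiplier(width)}, "
          f"{spectral_efficiency(100, width):.2f} b/s/Hz")
```

Carrying the same 100 gigabits in 37.5GHz rather than 50GHz raises spectral efficiency by a third, from 2.00 to about 2.67 b/s/Hz.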

Implementing the Open Optical Line System expands a data centre manager’s options. A coherent card can be added to the Arista 7500 and wavelengths sent directly using the CFP2-ACOs, or wavelengths can be sent over more demanding links, or ones that require greater spectral efficiency, by using the CloudConnect. The 7500 chassis could also be used solely for switching, with its traffic routed to the CloudConnect platform for off-site transmission.

Spectral efficiency is important for the large-scale data centre players. “The data centre interconnect guys are fibre-poor; they typically only have a single fibre pair going around the country and that is their network,” says Theodoras.

The joint demo shows that the Open Optical Line System concept works, he says: “Two years after Microsoft first talked about the concept at OFC, here we are today fully supporting it.”
