Marvell plans for CXL's introduction in the data centre

Monday, July 4, 2022 at 10:57AM

The open interconnect Compute Express Link (CXL) standard promises to change how data centre computing is architected.

CXL enables the rearrangement of processors (CPUs), accelerator chips, and memory within computer servers to boost efficiency.

Thad Omura

"CXL is such an important technology that is in high focus today by all the major cloud hyperscalers and system OEMs," says Thad Omura, vice president of flash marketing at Marvell.

Semiconductor firm Marvell has strengthened its CXL expertise by acquiring Tanzanite Silicon Solutions.

Tanzanite was the first company to show two CPUs sharing common memory using a CXL 2.0 controller, which it implemented on a field-programmable gate array (FPGA).

Marvell intends to use CXL across its portfolio of products.

Terms of the deal for the 40-staff Tanzanite acquisition have not been disclosed.

Data centre challenges

Memory chips are the largest spending item in a data centre. Each server CPU has its own DRAM, the fast volatile memory overseen by a DRAM controller. When a CPU uses only part of its memory, the rest sits idle since other server processors can't access it.

"That's been a big issue in the industry; memory has consistently been tied to some sort of processor," says Omura.

Maximising processing performance is another issue. Memory input-output (I/O) performance is not increasing as fast as processing performance. Memory bandwidth available to a core has thus diminished as core count per CPU has increased. "These more powerful CPU cores are being starved of memory bandwidth," says Omura.

CXL tackles both issues: it enables memory to be pooled, improving overall usage, and it opens new memory data paths to feed the cores.

CXL also enables heterogeneous compute elements to share memory. One example is accelerator ICs such as graphics processing units (GPUs) working alongside the CPU on a workload.

CXL technology

CXL is an industry-standard protocol that uses the PCI Express (PCIe) bus as the physical layer. PCI Express is used widely in the data centre; PCIe 5.0 is coming to market, and the PCIe 6.0 standard, the first to use 4-level pulse-amplitude modulation (PAM-4), was completed earlier this year.

In contrast, other industry interface protocols such as OpenCAPI (open coherent accelerator processor interface) and CCIX (cache coherent interconnect for accelerators) use custom physical layers.

"The [PCIe] interface feeds are now fast enough to handle memory bandwidth and throughput, another reason why CXL makes sense today," says Omura.

CXL supports low-latency memory transactions in the tens of nanoseconds. In comparison, the non-volatile memory express (NVMe) storage protocol, which uses a protocol stack run on a CPU, has transaction times in the tens of microseconds.

"The CXL protocol stack is designed to be lightweight," says Omura. "It doesn't need to go through the whole operating system stack to get a transaction out."

CXL enables cache coherency, which is crucial since it ensures that the accelerator and the CPU see the same data in a multi-processing system.

Memory expansion

The first use of CXL will be to simplify adding memory.

A server must be opened when adding extra DRAM using a DIMM (dual in-line memory module). And there are only so many DIMM slots in a server.

The DIMM also has no mechanism to pass telemetry data such as its service and bit-error history. Cloud data centre operators use such data to oversee their infrastructure.

Using CXL, a memory expander module can be plugged into the front of the server via PCIe, avoiding having to open the server. System cooling is also more straightforward since the memory is far from the CPU. The memory expander's CXL controller can also send telemetry data.

CXL also boosts memory bandwidth. When adding a DIMM to a CPU, the original and added DIMM share the same channel; capacity is doubled but not the interface bandwidth. Using CXL, however, opens an additional channel because the added memory uses the PCIe bus.

"If you're using the by-16 ports on a PCIe generation five, it [the interface] exceeds the [DRAM] controller bandwidth," says Omura.
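Omura's comparison can be checked with back-of-envelope arithmetic. The following is a minimal sketch: the PCIe 5.0 figures (32 GT/s per lane, 128b/130b encoding) come from the PCIe standard, but the DDR5-4800 channel is an assumed reference point, since the article does not say which DRAM interface he has in mind. The function names are illustrative, and protocol overhead is ignored.

```python
def pcie_bandwidth_gbytes(gt_per_s: float, lanes: int,
                          encoding: float = 128 / 130) -> float:
    """Raw one-direction PCIe bandwidth in GB/s, before protocol overhead."""
    return gt_per_s * lanes * encoding / 8


def dram_channel_bandwidth_gbytes(mt_per_s: float, bus_bytes: int = 8) -> float:
    """Peak bandwidth in GB/s of one DRAM channel with a 64-bit data bus."""
    return mt_per_s * bus_bytes / 1000


# PCIe 5.0 runs at 32 GT/s per lane; a by-16 port has 16 lanes.
pcie5_x16 = pcie_bandwidth_gbytes(32, 16)

# Assumption: one DDR5-4800 channel stands in for "the DRAM controller".
ddr5_4800 = dram_channel_bandwidth_gbytes(4800)

print(f"PCIe 5.0 x16: {pcie5_x16:.1f} GB/s per direction")
print(f"DDR5-4800 channel: {ddr5_4800:.1f} GB/s")
```

Under these assumptions the by-16 link offers roughly 63 GB/s in each direction against about 38 GB/s for the single DRAM channel, which is consistent with the quote. A faster DRAM grade or several channels per controller would narrow the gap.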

Source: Marvell

Pooled memory

CXL also enables memory pooling. A CPU can take memory from the pool for a task, and when completed, it releases the memory so that another CPU can use it. Future memory upgrades are then added to the pool, not an individual CPU. "That allows you to scale memory independently of the processors," says Omura.
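The take-and-release behaviour described above can be pictured with a toy model. This is only an illustrative sketch: `MemoryPool`, its methods and the capacities are hypothetical, and it says nothing about how a CXL controller or its software actually manages a pool.

```python
class MemoryPool:
    """Toy model of a shared memory pool (hypothetical, not a CXL API)."""

    def __init__(self, capacity_gb: int) -> None:
        self.capacity_gb = capacity_gb
        self.allocations: dict[str, int] = {}  # host name -> GB currently held

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.allocations.values())

    def allocate(self, host: str, size_gb: int) -> None:
        """Give a host memory from the pool for the duration of a task."""
        if size_gb > self.free_gb:
            raise MemoryError(f"only {self.free_gb} GB free in the pool")
        self.allocations[host] = self.allocations.get(host, 0) + size_gb

    def release(self, host: str) -> int:
        """Return everything a host holds so that other hosts can use it."""
        return self.allocations.pop(host, 0)

    def expand(self, extra_gb: int) -> None:
        """A memory upgrade grows the pool, not any single CPU."""
        self.capacity_gb += extra_gb


pool = MemoryPool(capacity_gb=512)
pool.allocate("cpu0", 384)   # cpu0 takes memory for a task
pool.release("cpu0")         # task done, memory returns to the pool
pool.allocate("cpu1", 256)   # another CPU can now use it
pool.expand(256)             # upgrade scales memory independently of CPUs
print(pool.free_gb)
```

The point of the model is that capacity belongs to the pool rather than to a processor, so an upgrade benefits whichever CPU needs memory next.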

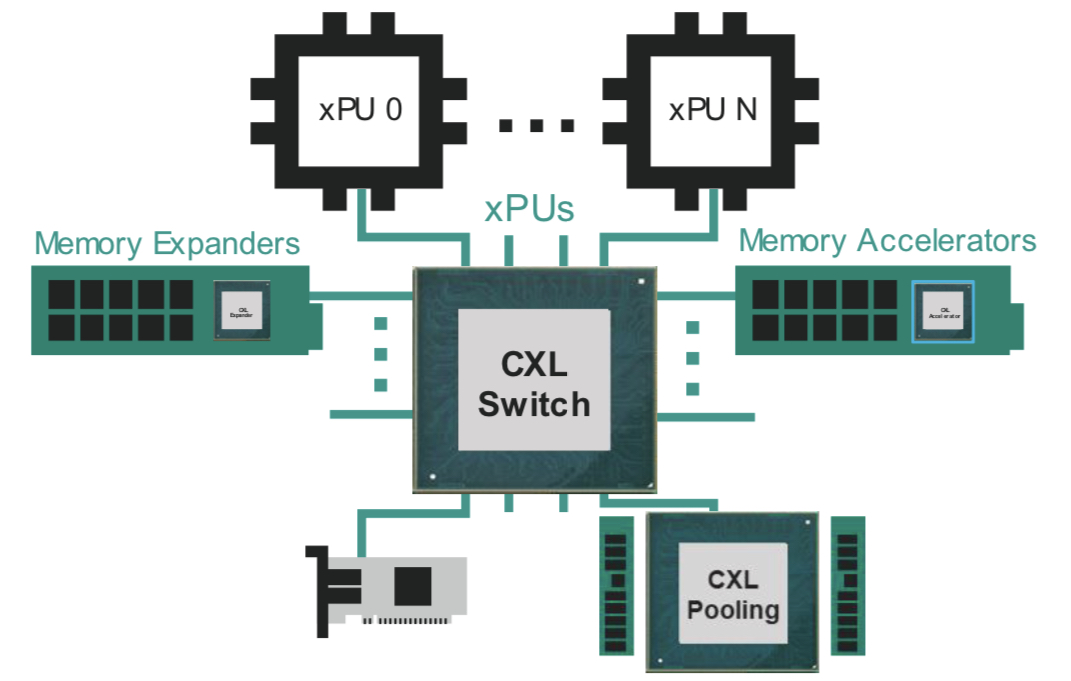

The likely next development is for all the CPUs to access memory via a CXL switch. Each CPU will no longer need a local DRAM controller; instead, it can access a memory expander or the memory pool using the CXL fabric (see diagram above).

Going through a CXL switch adds latency to the memory accesses. Marvell says that the round trip time for a CPU to access its local memory is about 100ns, while going through the CXL switch to pooled memory is projected to take 140-160ns.
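To put those figures in proportion, the sketch below works out the added latency and a simple weighted average for a workload that sends part of its accesses to the pool. The 100ns and 140-160ns values are Marvell's; the 25 per cent pooled share and the function names are assumptions chosen for illustration, and the model ignores caching and queuing effects.

```python
LOCAL_NS = 100                 # Marvell's figure for local DRAM round trip
POOLED_NS_RANGE = (140, 160)   # Marvell's projection via a CXL switch


def added_latency(local_ns: float, pooled_ns: float) -> tuple[float, float]:
    """Return the extra nanoseconds and the fractional increase over local."""
    extra = pooled_ns - local_ns
    return extra, extra / local_ns


def blended_latency(local_ns: float, pooled_ns: float,
                    pooled_fraction: float) -> float:
    """Average latency when a fraction of accesses go to pooled memory."""
    return (1 - pooled_fraction) * local_ns + pooled_fraction * pooled_ns


for pooled_ns in POOLED_NS_RANGE:
    extra, ratio = added_latency(LOCAL_NS, pooled_ns)
    # Assumption: a quarter of memory accesses are served from the pool.
    average = blended_latency(LOCAL_NS, pooled_ns, 0.25)
    print(f"{pooled_ns}ns pooled: +{extra}ns ({ratio:.0%}), "
          f"average {average:.0f}ns")
```

On these numbers the switch adds 40 to 60 per cent to an individual pooled access, while the average cost depends on how much of the working set lives in the pool.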

The switch can also connect a CXL accelerator. Here, an accelerator IC is paired with memory that can be shared in a cache-coherent manner with the CPU through the switch fabric (see diagram above).

I/O acceleration hardware can also be added using the CXL switch. Such hardware includes Ethernet, data processing unit (DPU) smart network interface controllers (smartNICs), and solid-state drive (SSD) controllers.

"Here, you are focused on accelerating protocol-level processing between the network device or between the CPU and storage," says Omura. These I/O devices become composable using the CXL fabric.

More CXL, less Ethernet

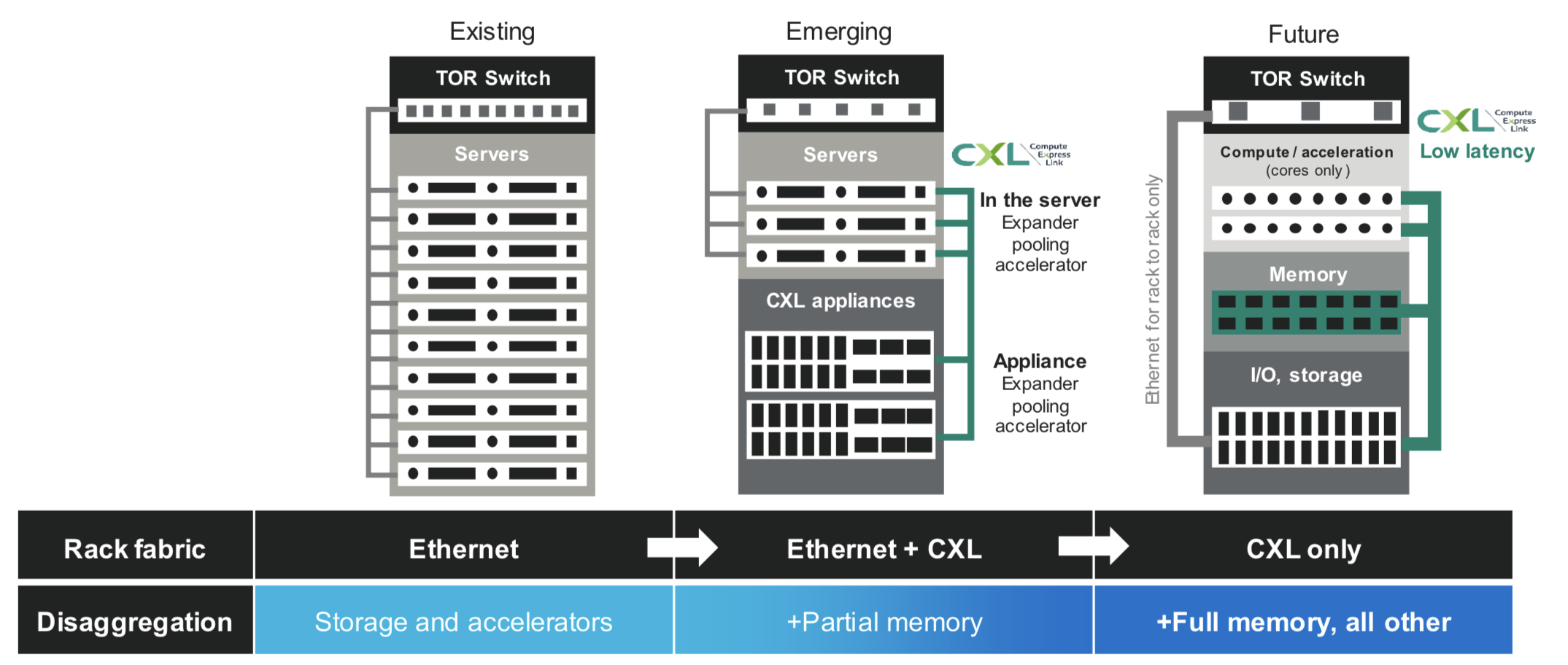

Server boxes in the data centre are stacked in racks. Each server comprises CPUs, memory, accelerators, network devices and storage. The servers talk to each other via Ethernet and to other server racks using a top-of-rack switch.

But the server architecture will change as CXL takes hold in the data centre.

Source: Marvell

"As we add CXL into the infrastructure, for the first time, you're going to start to see disaggregate memory," says Omura. "You will be able to dynamically assign memory resources between servers."

For some time yet, servers will have dedicated memory. Eventually, however, the architecture will become disaggregated with separate compute, memory and I/O racks. Moreover, the interconnect between the boxes will be through CXL. "Some of the same technology that has been used to transmit high-speed Ethernet will also be used for CXL," says Omura.

Omura says deployment of the partially disaggregated rack will start in 2024-25, while complete disaggregation will likely appear around the end of the decade.

Co-packaged optics and CXL

Marvell says co-packaging optics will fit well with CXL.

Nigel Alvares

"As you disaggregate memory from the CPU, there is a need to have electro-optics drive distance and bandwidth requirements going forward," says Nigel Alvares, vice president of solutions marketing at Marvell.

However, CXL links must be justified on cost and latency grounds, which limits the distance over which they can connect equipment.

"The distance in which you can transmit data over optics versus latency and cost is all being worked out right now," says Omura. The distance is determined by the transit time of light in fibre and the forward error correction scheme used.
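The transit-time part of that trade-off is simple to estimate. The sketch below is a rough illustration only: it assumes a refractive index of about 1.47 for silica fibre (roughly 4.9ns per metre), treats forward error correction as a fixed per-direction delay supplied by the caller, and uses hypothetical function names. It does not model any particular FEC scheme or Marvell product.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
FIBRE_INDEX = 1.47  # assumption: typical refractive index of silica fibre

# One-way propagation delay per metre of fibre, in nanoseconds (~4.9).
NS_PER_METRE = FIBRE_INDEX / SPEED_OF_LIGHT_M_PER_S * 1e9


def fibre_round_trip_ns(distance_m: float, fec_ns: float = 0.0) -> float:
    """Round-trip delay: fibre transit plus an assumed FEC delay each way."""
    one_way = distance_m * NS_PER_METRE + fec_ns
    return 2 * one_way


def max_distance_m(round_trip_budget_ns: float, fec_ns: float = 0.0) -> float:
    """Longest fibre run that fits a round-trip latency budget."""
    one_way_budget = round_trip_budget_ns / 2 - fec_ns
    return max(one_way_budget, 0.0) / NS_PER_METRE


print(f"10m of fibre, no FEC: {fibre_round_trip_ns(10):.0f}ns round trip")
print(f"Reach within a 50ns budget: {max_distance_m(50):.1f}m")
```

Ten metres of fibre alone costs about 98ns per round trip, comparable to a local DRAM access, which shows why the reach of a memory interconnect is counted in metres rather than kilometres.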

But CXL needs to remain very low latency because memory transactions are being done over it, says Omura: "We're no longer fighting over just microseconds or milliseconds of networking, now we're fighting over nanoseconds."

Marvell can address such needs with its acquisition of Inphi and its PAM-4 and optical expertise, the adoption of PAM-4 encoding for PCIe 6.0, and now the addition of CXL technology.
