Nvidia's plans for the data processing unit

Tuesday, February 1, 2022 at 10:19AM

When Nvidia’s CEO, Jensen Huang, discussed its latest 400-gigabit BlueField-3 data processing unit (DPU) at the company’s 2021 GTC event, he also detailed its successor.

Companies rarely discuss chip specifications two generations ahead; the BlueField-3 only begins sampling next quarter.

BlueField-3 die. Source: Nvidia

The BlueField-4 will advance Nvidia’s DPU family. It will double again the traffic throughput to 800 gigabits-per-second (Gbps) and almost quadruple the BlueField-3’s integer processing performance.

But one metric cited stood out. The BlueField-4 will increase the number of tera operations per second (TOPS) performed by a factor of nearly 700: 1,000 TOPS compared to the BlueField-3’s 1.5 TOPS.

Huang said artificial intelligence (AI) technologies will be added to the BlueField-4, implying that the massively parallel hardware used for Nvidia’s graphics processing units (GPUs) is to be grafted onto its next-but-one DPU.

Why add AI acceleration? And will it change the DPU, a relatively new processor class?

Data processing units

Nvidia defines the DPU as a programmable device for networking.

The chip combines general-purpose processing - multiple RISC cores used for control-plane tasks and programmed in a high-level language - with accelerator units tailored for packet-processing data-plane tasks.

“The accelerators perform functions for software-defined networking, software-defined storage and software-defined security,” says Kevin Deierling, senior vice president of networking at Nvidia.

The DPU can be added to a Smart Network Interface Card (SmartNIC) that complements the server’s CPU, taking over the data-intensive tasks that would otherwise burden the server’s most valuable resource.

Other customers use the DPU as a standalone device. “There is no CPU in their systems,” says Deierling.

Storage platforms are one such example, which Deierling describes as a narrowly defined workload. “They don't need a CPU and all its cores, what they need is the acceleration capabilities built into the DPU, and a relatively small amount of compute to perform the control-path operations,” says Deierling.

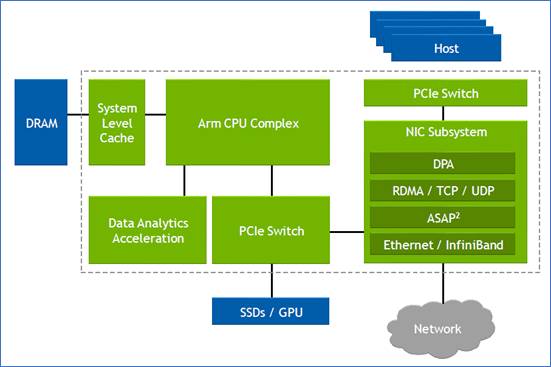

Since the DPU is the server’s networking gateway, it supports PCI Express (PCIe). The PCIe bus interfaces to the host CPU, to accelerators such as GPUs, and supports NVMe storage. NVMe is a non-volatile memory host controller interface specification.

BlueField-3

When announced in 2021, the 22-billion transistor BlueField-3 chip was scheduled to sample this quarter. “We need to get the silicon back and do some testing and validation before we are sampling,” says Deierling.

The device is a scaled-up version of the BlueField-2: it doubles the throughput to 400Gbps and includes more CPU cores: 16 Cortex-A78 64-bit ARM cores.

Nvidia deliberately chose not to use more powerful ARM cores. “The ARM is important, there is no doubt about it, and there are newer classes of ARM,” says Deierling. “We looked at the power and the performance benefits you’d get by moving to one of the newer classes and it doesn't buy us what we need.”

The BlueField-3 has the equivalent processing performance of 300 x86 CPU cores, says Nvidia, but this is due mainly to the accelerator units, not the ARM cores.

The BlueField-3 input-output (I/O) includes Nvidia’s ConnectX-7 networking unit, which supports 400 Gigabit Ethernet (GbE) that can be split over 1, 2 or 4 ports. The DPU also doubles the InfiniBand interface compared to the BlueField-2, offering either a single 400Gbps (NDR) port or two 200Gbps (HDR) ports. There are also 32 lanes of PCI Express 5.0, each lane supporting 32 giga-transfers-per-second (GT/s) in each direction.
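To put the PCIe figure in context, the sketch below estimates the raw bandwidth of the 32-lane interface. The lane count and transfer rate come from the text; the 128b/130b line-coding efficiency is a property of PCIe 3.0 and later. The function name is illustrative, and the estimate ignores packet and protocol overhead, so real throughput will be lower.

```python
# Rough per-direction bandwidth of the 32-lane PCIe 5.0 interface.
LANES = 32                       # from the article
GT_PER_S_PER_LANE = 32           # giga-transfers per second per lane, from the article
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line coding (PCIe 3.0 onwards)


def pcie_bandwidth_gbps(lanes=LANES, gt_per_s=GT_PER_S_PER_LANE,
                        efficiency=ENCODING_EFFICIENCY):
    """Return the bandwidth in gigabits per second, per direction.

    Only line-coding overhead is accounted for; transaction-layer
    headers and flow control are ignored.
    """
    return lanes * gt_per_s * efficiency


if __name__ == "__main__":
    gbps = pcie_bandwidth_gbps()
    print(f"{gbps:.0f} Gbps (~{gbps / 8:.0f} GB/s) per direction")
```

On these assumptions the host interface has roughly 1Tbps of headroom per direction, comfortably above the DPU's 400Gbps network throughput.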

The memory interface is two DDR5 channels, doubling both the memory performance and the channel count of the BlueField-2.

The data path accelerator (DPA) of the BlueField-3 comprises 16 cores, each supporting 16 instruction threads. Typically, when a packet arrives, it is decrypted and its headers are inspected, after which the accelerators are used. If the specific function needed is not accelerated, the packet is instead assigned to a DPA thread and processed there.
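The dispatch decision described above can be sketched as follows. This is an illustration only: the core and thread counts come from the text, but the set of accelerated functions, the round-robin thread assignment and all names are assumptions, not Nvidia's scheduler.

```python
from itertools import cycle

DPA_CORES = 16         # from the article
THREADS_PER_CORE = 16  # from the article

# Hypothetical: which functions have a fixed-function accelerator.
ACCELERATED = frozenset({"ipsec", "checksum", "rss"})


def make_thread_pool():
    """Cycle endlessly over every (core, thread) slot: 16 x 16 = 256 slots."""
    slots = [(c, t) for c in range(DPA_CORES) for t in range(THREADS_PER_CORE)]
    return cycle(slots)


def dispatch(function, pool):
    """Send accelerated functions to hardware, everything else to a DPA thread.

    Round-robin assignment is an assumption made for this sketch.
    """
    if function in ACCELERATED:
        return ("accelerator", function)
    core, thread = next(pool)
    return ("dpa_thread", core, thread)


if __name__ == "__main__":
    pool = make_thread_pool()
    for fn in ["checksum", "custom_telemetry", "custom_parser"]:
        print(fn, "->", dispatch(fn, pool))
```

With 16 cores of 16 threads each, up to 256 packets needing non-accelerated handling can be in flight on the DPA at once.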

“The DPA is a specialised part of our acceleration core that is highly programmable,” says Deierling.

Other programmable logic blocks include the accelerated switching and packet processing (ASAP2) engine that parses packets. It inspects packet fields looking for a match that tells it what to do, such as dropping the packet or rewriting its header.
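The match-then-act behaviour described here resembles a match-action table. The following is a minimal software sketch of that idea, not the ASAP2 engine's actual interface: the packet fields, the rule format and the default of forwarding unmatched packets are all assumptions made for illustration.

```python
from dataclasses import dataclass, replace
from typing import Callable, Optional


@dataclass(frozen=True)
class Packet:
    """Hypothetical, heavily simplified set of parsed header fields."""
    dst_ip: str
    dst_port: int
    vlan: int = 0


@dataclass
class Rule:
    match: dict                                   # field name -> required value
    action: Callable[[Packet], Optional[Packet]]  # returns None to drop


def drop(pkt):
    """Action: discard the packet."""
    return None


def set_vlan(vlan):
    """Action factory: rewrite one header field."""
    return lambda pkt: replace(pkt, vlan=vlan)


def process(pkt, rules):
    """Apply the first rule whose fields all match; otherwise forward unchanged."""
    for rule in rules:
        if all(getattr(pkt, field) == value for field, value in rule.match.items()):
            return rule.action(pkt)
    return pkt


if __name__ == "__main__":
    rules = [Rule({"dst_port": 23}, drop),
             Rule({"dst_ip": "10.0.0.5"}, set_vlan(100))]
    print(process(Packet("10.0.0.9", 23), rules))
    print(process(Packet("10.0.0.5", 443), rules))
```

In hardware the lookup is done in parallel rather than by walking a list, but the contract is the same: fields in, an action out.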

In-line acceleration

The BlueField-3 implements the important task of security.

A packet can have many fields and encapsulations. For example, the fields can include a TCP header, quality of service, a destination IP and an IP header. These can be encapsulated into an overlay such as VXLAN and further encapsulated into a UDP packet before being wrapped in an outer IP datagram that is encrypted and sent over the network. Then, only the IPsec header is exposed; the remaining fields are encrypted.

Deierling says the BlueField-3 does the packet encryption and decryption in-line.

For example, the DPU uses the in-line IPsec decode to expose the headers of the various virtual network interfaces - the overlays - of a received packet. Once the required overlay is picked, the packet is sent through a service-function chain that uses all the accelerators available, for tasks such as tackling distributed denial-of-service attacks, firewalling and load balancing.
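Why in-line decryption matters can be shown with a toy model of the encapsulation described above. The layer list is a simplification of a real IPsec-protected VXLAN packet, and the names are illustrative assumptions; the point is only which headers a parser can see before and after decryption.

```python
# Simplified layering, outermost first. Real packets carry more headers
# (for example inner and outer Ethernet); this ordering is an assumption.
LAYERS = ["outer_ip", "ipsec_esp", "udp", "vxlan", "inner_ip", "tcp", "payload"]

# Everything from this layer inward is ciphertext on the wire.
ENCRYPTED_FROM = "udp"


def visible_layers(layers, decrypted):
    """Return the layers whose headers a packet parser can read."""
    if decrypted:
        return list(layers)
    return layers[:layers.index(ENCRYPTED_FROM)]


if __name__ == "__main__":
    print("on the wire:       ", visible_layers(LAYERS, decrypted=False))
    print("after in-line decode:", visible_layers(LAYERS, decrypted=True))
```

Before decryption only the outer IP and IPsec headers are readable, so nothing that depends on the overlay or the inner TCP/IP fields can be accelerated. After the in-line decode, every layer is available to the accelerators.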

“You can do storage, you can do an overlay, receive-side scaling [RSS], checksums,” says Deierling. “All the accelerations built into the DPU become available.”

Without in-line processing, the received packet goes through a NIC and into the memory of the host CPU. There, it is encrypted and hence opaque; the packet’s fields can’t benefit from the various acceleration techniques. “It is already in memory when it is decrypted,” says Deierling.

The DPU and its functional units are shown within the dotted line; the host processor here is an x86 CPU. Source: Nvidia

Often, with the DPU, the received packet is decrypted and passed to the host CPU where the full packet is visible. Then, once the host application has processed the data, the data and packet may be encrypted again before being sent on.

“In a ‘zero-trust’ environment, there may be a requirement to re-encrypt the data before sending it onto the next hop,” says Deierling. “In this case, we just reverse the pipeline.”

An example is confidential healthcare information where data needs to be encrypted before being sent and stored.

DPU evolution

There are many applications set to benefit from DPU hardware. These cover the many segments Nvidia is addressing including AI, virtual worlds, robotics, self-driving cars, 5G and healthcare.

All need networking, storage and security. “Those are the three things we do but it is software-defined and hardware-accelerated,” says Deierling.

Nvidia has an ambitious target of launching a new DPU every 18 months. That suggests the BlueField-4 could sample as early as the end of 2023.

The 800-gigabit BlueField-4 will have 64 billion transistors and nearly quadruple the integer processing performance of the BlueField-3: from 42 to 160 SPECint.
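The generational jump is easier to compare as ratios. The short sketch below uses only the figures quoted in this article; the dictionary keys and function name are illustrative.

```python
# Headline figures quoted in the article: (BlueField-3, BlueField-4).
SPECS = {
    "throughput_gbps": (400, 800),
    "transistors_billion": (22, 64),
    "specint": (42, 160),
    "ai_tops": (1.5, 1000),
}


def scaling_factors(specs):
    """Return the BlueField-4 / BlueField-3 ratio for each metric."""
    return {name: new / old for name, (old, new) in specs.items()}


if __name__ == "__main__":
    for name, factor in scaling_factors(SPECS).items():
        print(f"{name}: {factor:.1f}x")
```

Throughput, transistor count and integer performance all grow by low single-digit factors, while the TOPS figure grows by a factor of several hundred.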

Nvidia says its DPUs, including the BlueField-4, are evolutionary in how they scale the ARM cores, accelerators and throughput. However, the AI acceleration hardware added to the BlueField-4 will change the nature of the DPU.

“What is truly salient is that [1,000] TOPS number,” says Deierling. “And that is an AI acceleration; that is leveraging capabilities Nvidia has on the GPU side.”

Self-driving cars, 5G and robotics

An AI-assisted DPU will support such tasks as video analytics, 5G and robotics.

For self-driving cars, the DPU will reside in the data centre, not in the car. But that too will change. “Frankly, the car is becoming a data centre,” notes Deierling.

Deep learning currently takes place in the data centre but as the automotive industry adopts Ethernet, a car’s sensors - lidar, radar and cameras - will send massive amounts of data which an IC must comprehend.

This is relevant not just for automotive but all applications where data from multiple sensors needs to be understood.

Deierling describes Nvidia as an AI-on-5G company.

“We have a ton of different things that we are doing and for that, you need a ton of parallel-processing capabilities,” he says. This is why the BlueField-4 is massively expanding its TOPS rating.

He describes how a robot on an automated factory floor will eventually understand its human colleagues.

“It is going to recognise you as a human being,” says Deierling. “You are going to tell it: ‘Hey, stand back, I’m coming in to look at this thing’, and the robot will need to respond in real-time.”

Video analytics, voice processing, and natural language processing are all needed while the device will also be running a 5G interface. Here, the DPU will reside in a small mobile box: the robot.

“Our view of 5G is thus more comprehensive than just a fast pipe that you can use with a virtual RAN [radio access network] and Open RAN,” says Deierling. “We are looking at integrating this [BlueField-4] into higher-level platforms.”
